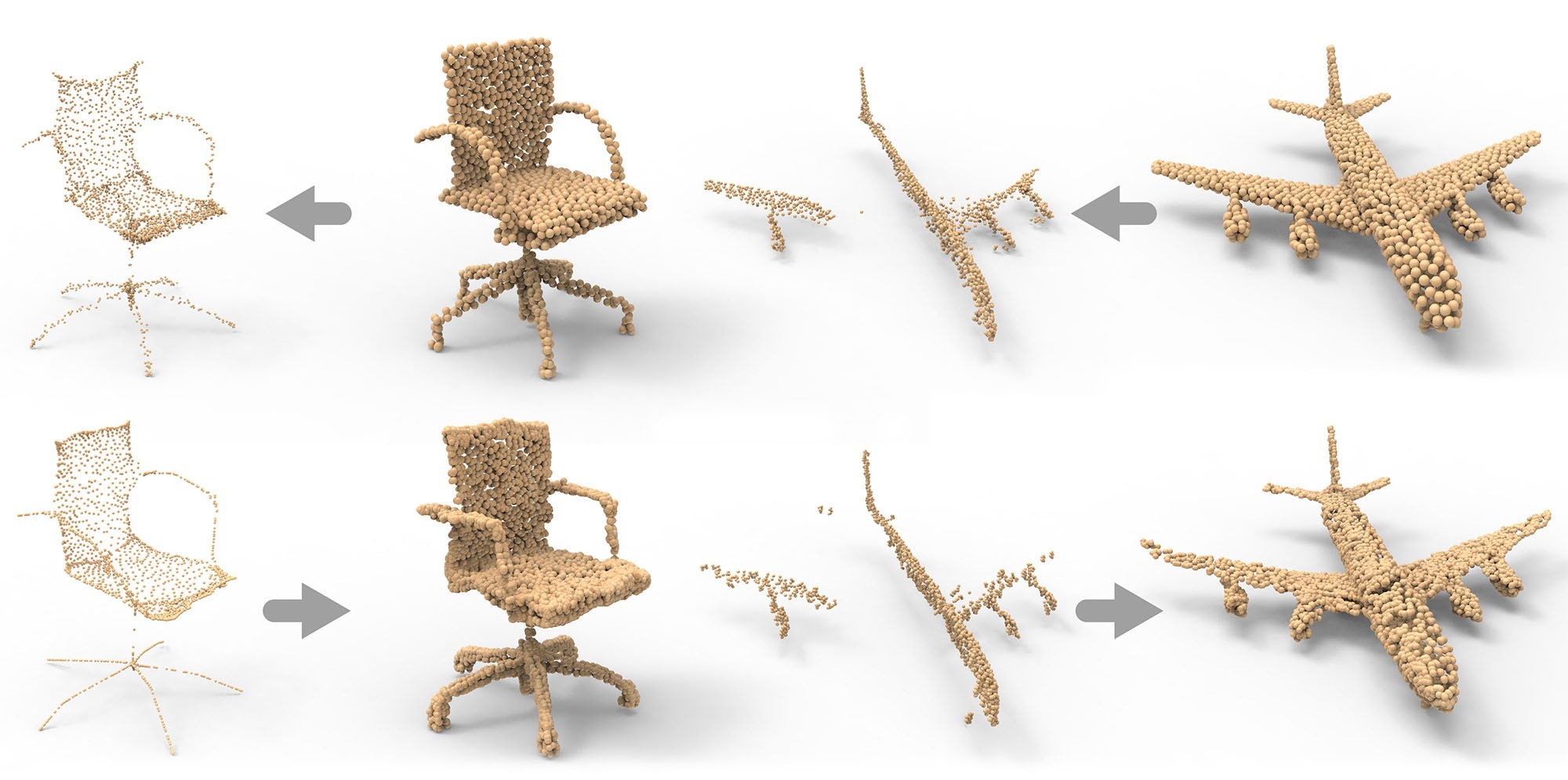

We introduce P2P-NET, a general-purpose deep neural network that learns geometric transformations between point-based shape representations from two domains, e.g., meso-skeletons and surfaces, or partial and complete scans. P2P-NET is a bidirectional point displacement network: it transforms a source point set into a target point set of the same cardinality, and vice versa, by applying point-wise displacement vectors learned from data. P2P-NET is trained on paired shapes from the source and target domains, but without relying on point-to-point correspondences between the source and target point sets. The training loss combines two unidirectional geometric losses, each enforcing a shape-wise similarity between the predicted and the target point sets, and a cross-regularization term that encourages consistency between displacement vectors going in opposite directions. We present several applications enabled by our general-purpose bidirectional P2P-NET to highlight the effectiveness, versatility, and potential of the network in solving a variety of point-based shape transformation problems.
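To make the structure of the training loss concrete, here is a minimal NumPy sketch of a loss with the same three parts: two unidirectional shape-wise terms and one cross-regularization term. It is an illustration under stated assumptions, not the paper's implementation. The Chamfer distance stands in for the geometric loss, the regularizer shown is one simple way to penalize inconsistent opposite-direction displacements, and the function names and `reg_weight` are hypothetical. The displacement arrays stand for network outputs.

```python
import numpy as np

def pairwise_sq_dists(a, b):
    """Squared Euclidean distances between rows of a (n, 3) and b (m, 3)."""
    diff = a[:, None, :] - b[None, :, :]
    return np.sum(diff ** 2, axis=-1)

def chamfer_loss(pred, target):
    """Shape-wise similarity that needs no point-to-point correspondence.

    Assumption: Chamfer distance is used here as a stand-in geometric loss.
    """
    d = pairwise_sq_dists(pred, target)
    return d.min(axis=1).mean() + d.min(axis=0).mean()

def cross_regularizer(src, disp_fwd, tgt, disp_bwd):
    """Illustrative consistency term (an assumption, not the paper's formula).

    Each displaced source point is matched to its nearest target point, and
    the two displacement vectors are penalized unless they cancel out.
    """
    pred_tgt = src + disp_fwd
    nearest = pairwise_sq_dists(pred_tgt, tgt).argmin(axis=1)
    return np.mean(np.sum((disp_fwd + disp_bwd[nearest]) ** 2, axis=-1))

def total_loss(src, tgt, disp_fwd, disp_bwd, reg_weight=0.1):
    """Two unidirectional geometric losses plus the cross term."""
    loss_fwd = chamfer_loss(src + disp_fwd, tgt)   # source -> target
    loss_bwd = chamfer_loss(tgt + disp_bwd, src)   # target -> source
    reg = cross_regularizer(src, disp_fwd, tgt, disp_bwd)
    return loss_fwd + loss_bwd + reg_weight * reg

# Toy paired shapes: the target is the source shifted along x and reshuffled,
# so no row-wise correspondence exists between the two arrays.
rng = np.random.default_rng(0)
shift = np.array([1.0, 0.0, 0.0])
src = rng.random((64, 3))
tgt = (src + shift)[rng.permutation(64)]

ideal = total_loss(src, tgt, np.tile(shift, (64, 1)), np.tile(-shift, (64, 1)))
untrained = total_loss(src, tgt, np.zeros((64, 3)), np.zeros((64, 3)))
print(ideal, untrained)
```

In this toy setup the ideal displacements drive all three terms to (numerically) zero even though the target rows are shuffled, which is the sense in which the loss is shape-wise rather than correspondence-based.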

@article{yin2018p2pnet,
  author = {Kangxue Yin and Hui Huang and Daniel Cohen-Or and Hao Zhang},
  title = {P2P-NET: Bidirectional Point Displacement Net for Shape Transform},
  journal = {ACM Transactions on Graphics (Special Issue of SIGGRAPH)},
  volume = {37},
  number = {4},
  pages = {152:1--152:13},
  year = {2018}
}